Intro

Following a glorious review by a guy on Delphipraxis, I decided to test Claude Code, which was sold there as the ultimate productivity tool for Delphi (1h of development with Claude being worth as much as a whole day of programming alone). With low hopes (based on my previous experience with AIs), I decided to perform my own tests.

I spent A LOT of time testing Claude and the article got large. Maybe too large. But besides the setup and tests, you will also find a lot of useful commands and tricks for Claude. If it is too long and you want the TL;DR, here is the conclusion:

1. If you are a beginner in Delphi with less than 1 year experience, or you never learned the language properly, Claude can be gold.

2. If you are an average Delphi programmer, Claude might help you with some tasks, but it might get in your way in others.

3. If you are a Delphi gray beard, don’t waste your time. Overall, on real-world projects, Claude will without any doubt massively decrease your productivity.

(You could still use it for tiny or legacy projects).

I think people who oversell Claude have not yet mastered the language. In that case, yes, Claude can help them.

____________________________

Preparations

Step 1. Install WSL, Linux and NodeJS

Time: 20 minutes. Ease: 5/5 stars

Claude is made for Linux, so there will be a few hiccups to make it work on Windows via WSL. The process is explained here.

Note: Honestly, I expected it to be available for Windows too. From what I remember, Windows still holds 72-75% of the desktop market, while Linux has only… peanuts. And it is not so difficult to make a Windows application (if you know Delphi 🙂 ). I think all the complicated steps below would have been eliminated with a Windows app. Probably Anthropic is working on it….
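Before moving on to Step 2, it helps to confirm from inside the WSL shell that Node.js and npm are really there. The sketch below is my own minimal pre-flight check, not an official tool: the function names are hypothetical and MIN_NODE_MAJOR=18 is an assumed minimum, so check Anthropic's current requirements. It also warns about a commonly reported WSL pitfall, where the Windows npm (under /mnt/c/...) is found instead of the Linux one.

```shell
# Hypothetical pre-flight check for Node.js/npm inside WSL (not an official tool).
# MIN_NODE_MAJOR is an assumption; check Anthropic's current requirements.
MIN_NODE_MAJOR=18

# Extract the major version from a string such as "v20.11.1" -> "20".
node_major() {
  local v="${1#v}"   # drop a leading "v"
  echo "${v%%.*}"    # keep everything before the first dot
}

check_node() {
  if ! command -v node >/dev/null 2>&1 || ! command -v npm >/dev/null 2>&1; then
    echo "node/npm not found in this WSL shell" >&2
    return 1
  fi
  # If npm resolves to /mnt/c/..., the Windows install is shadowing the Linux one.
  case "$(command -v npm)" in
    /mnt/*) echo "warning: npm is the Windows copy: $(command -v npm)" >&2 ;;
  esac
  local major
  major="$(node_major "$(node --version)")"
  if [ "$major" -lt "$MIN_NODE_MAJOR" ]; then
    echo "Node.js $major is older than the assumed minimum $MIN_NODE_MAJOR" >&2
    return 1
  fi
  echo "Node.js $major found at $(command -v node)"
}
```

Run check_node once after installing Node.js; if it complains, fix that first, because Step 2 depends on a working Linux-side npm.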

Step 2. Install Claude Code

Time: 1h. Ease: 1/5 stars

The installation failed. It complained about permissions and other issues. I fixed the permissions with these commands:

# Create a directory for global npm packages

mkdir -p ~/.npm-global

# Configure npm to use this directory

npm config set prefix '~/.npm-global'

# Add the bin directory to your PATH

echo 'export PATH=~/.npm-global/bin:$PATH' >> ~/.bashrc

# Reload your bash configuration

source ~/.bashrc

# Install Claude

npm install -g @anthropic-ai/claude-code
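One small problem with the commands above: the echo line appends to ~/.bashrc every time you run it, so repeating the setup (easy to do after a failed install) piles up duplicate PATH entries. A minimal sketch of a guarded version, assuming bash and GNU grep; the function name is hypothetical:

```shell
# Hypothetical helper: append the npm-global bin dir to PATH in a shell rc file,
# but only once, so re-running the setup does not stack duplicate lines.
add_npm_global_to_path() {
  local rc="${1:-$HOME/.bashrc}"
  local line='export PATH=~/.npm-global/bin:$PATH'   # same text as the echo above
  touch "$rc"
  # -q: quiet, -x: whole-line match, -F: fixed string (no regex)
  if ! grep -qxF "$line" "$rc"; then
    echo "$line" >> "$rc"
  fi
}
```

Call add_npm_global_to_path in place of the echo line, then run source ~/.bashrc as before.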

Claude.exe note: Somebody on that forum mentioned that simply typing “irm https://claude.ai/install.ps1 | iex” in PowerShell will install Claude, but it didn’t work for me. So, I had to do it manually.

Step 3. Purchase a Pro plan

Time: 15 minutes. Ease: 3/5

Guess what? There is no trial for Claude Code. So, after installation, Claude Code will ask you for a subscription. When you see this menu, choose [1]:

Select login method:
1. Claude account with subscription · Pro, Max, Team, or Enterprise
2. Anthropic Console account · API usage billing

The Pro plan (the cheapest) is 21.5 euro (VAT included); the Max plan is over 90 euro + 19% VAT.

Warning: This is a subscription! You will be charged monthly. So, don’t forget to cancel the subscription as soon as you have created it! You can re-enable it later if it is useful to you.

Update, one week later: I realized that for the 15000 lines of code I tested Claude on, the Pro plan lasts only about 10-35 minutes; then I hit the limit and have to wait for the reset (5 hours).

Update, two weeks later: Don’t bother buying the Pro plan unless you have a microscopic project. I had to upgrade to the Max plan (100 euro + VAT).

Step 4. Setup Claude Code

Time: 50 minutes. Ease: 3/5

At one point, Claude Code will try to open a browser, but it will fail (I guess WSL does not give it permission to launch the browser). You need to manually open the displayed link in your browser and copy a “key” back into Claude Code to activate it.

Warning: Once I purchased the Pro plan, I started to see my quota being used. It immediately jumped to 10%, even though Claude Code was not yet connected to the Pro plan. I guess they connected the web chatbot to the Pro plan. This means that once you have a paid plan, the free chatbot is gone! They never mentioned that. Nasty!

Update: I just confirmed this.

Then I changed the default settings and created a skill file (basic instructions for the AI). You can use this one as a template.

Type /config for a bunch of easy-to-access settings:

Auto-compact: true
Show tips: true
Thinking mode: true
Prompt suggestions: true
Rewind code (checkpoints): true
Verbose output: false
Terminal progress bar: false
Default permission mode: Default
Respect .gitignore in file picker: true
Auto-update channel: stable
Theme: Light mode
Notifications: Auto
Output style: default
Language: Default (English)
Editor mode: normal
Show code diff footer: true
Model: Default (recommended)
Auto-connect to IDE (external terminal): true
Claude in Chrome enabled by default: true

I highlighted the important ones.

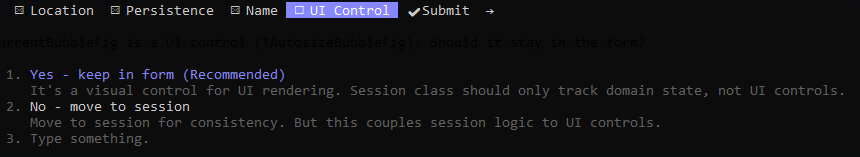

Model

I did all the tests with the Sonnet 4.5 model. The command to switch models is:

/model
❯ 1. Default (recommended) ✔ Sonnet 4.5 · Best for everyday tasks
  2. Opus Opus 4.5 · Most capable for complex work
  3. Haiku Haiku 4.5 · Fastest for quick answers
  4. Opus-Plan mode (my note: needs to be activated manually)

Bug: Opus-Plan does not appear in the menu by default. It is a “GUI” bug introduced in v2.0, but the feature works. Just type /model opusplan and the option will appear. It will also not appear unless you have purchased the Pro plan (or higher).

Of course it can also be added to ~/.claude/settings.json:

{"model": "opusplan"}

Update: OpusPlan seems to give worse results than the Default, so I switched back.

Step 5. Connecting to a Delphi project

Time: 15 minutes. Ease: 2/5

Finally, I reached the point where everything seemed to be installed and set up properly. The Claude Code interface is a typical Linux “GUI interface”. The problem I had right from the beginning is that important information is displayed off-screen (I am using the default console settings here). One of the truncated strings was how to change the directory.

I gave this task only a 2/5 because I expected a menu/command that would say “Load project” or something like this. We are not in 1994 anymore 🙂 But maybe I need to invest some time and read its manual.

My project is in “c:\Projects\Envionera src\”; the command to open that folder is:

ls -la "/mnt/c/Projects/Envionera src/"

Simply letting Claude look into that folder cost 2% of my credits.
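If you prefer to start Claude directly inside the project folder (instead of pointing it at the folder afterwards), you need the WSL form of the Windows path. The helper below is a hypothetical sketch that converts a path such as c:\Projects\Envionera src\ to the /mnt/<drive>/ form, assuming WSL's default drive mounts; inside WSL the built-in wslpath command does the same conversion and is the safer choice.

```shell
# Hypothetical helper: turn a Windows path such as c:\Projects\Envionera src\
# into the /mnt/<drive>/... form used by WSL's default drive mounts.
# (Inside WSL, the built-in `wslpath` tool does this conversion for real.)
win_to_wsl() {
  local p="$1"
  local drive="${p:0:1}"   # drive letter, e.g. "c"
  local rest="${p:2}"      # everything after "c:"
  rest="${rest//\\//}"     # backslashes -> forward slashes
  drive="$(printf '%s' "$drive" | tr '[:upper:]' '[:lower:]')"
  printf '/mnt/%s%s\n' "$drive" "$rest"
}
```

Usage: cd "$(win_to_wsl 'c:\Projects\Envionera src\')" && claude. The quotes matter because the folder name contains a space.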

Next, run the init command in that project folder to create a description of the project. Future sessions of Claude will read that file to figure out what the project is about. This is cheaper than reading all the PAS files in that folder over and over again.

/init

The init command will create the “CLAUDE.md” file, which you will have to review manually to improve it and fix its errors.

Update: I figured out that I don’t have to type that stupid Linux command each time I start Claude. I just say: “work on this project: c:\Projects\Envionera src\Claude.md”. That’s it.

Connecting to other folders

To connect Claude to external libraries like Light Saber (c:\Projects\LightSaber\) and AI Client (c:\Projects\LightSaber AI Client\), create a file “c:\Projects\Envionera src\.claude\settings.json” and put this in it:

{

"permissions": {

"additionalDirectories": [

"../../LightSaber",

"../../LightSaber AI Client"

]}}
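If you prefer to create that file from the WSL shell, a heredoc does the job. This is a sketch with a hypothetical helper name; it writes the same JSON as above, keeps the relative paths exactly as in the original file, and refuses to overwrite an existing settings.json so settings you already have are not lost.

```shell
# Hypothetical helper: create <project>/.claude/settings.json with the
# additionalDirectories block. Refuses to overwrite an existing file.
write_claude_settings() {
  local project="$1"
  local file="$project/.claude/settings.json"
  if [ -e "$file" ]; then
    echo "$file already exists; edit it by hand" >&2
    return 1
  fi
  mkdir -p "$project/.claude"
  # Quoted 'EOF' keeps the JSON literal (no variable expansion).
  cat > "$file" <<'EOF'
{
  "permissions": {
    "additionalDirectories": [
      "../../LightSaber",
      "../../LightSaber AI Client"
    ]
  }
}
EOF
}
```

Usage: write_claude_settings "/mnt/c/Projects/Envionera src"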

Note: opening a project containing 15300 lines of code took about 5% of my session credits. So, it is better not to restart Claude every 15 minutes.

Useful commands

I created a curated list of useful console commands here.

Testing

First test

It is time to see what the AI thinks about the above project and, based on that, how to improve it. I will start with a small test and, if everything seems fine, I plan to go big.

Prompt:

> Make a very short quality review. Is the code quality ok? Is the architecture ok?

Output:

Code Quality Review - Summary

✅ Strengths

Architecture (Good)
- Clean hierarchical data model with clear parent-child relationships
- Well-separated concerns (UI, data model, AI integration)
- Consistent serialization pattern across all classes
- Logical flow: Setup → Generate Questions → Evaluate → Spaced Repetition

Code Quality (Solid)
- Proper memory management (FreeAndNil used throughout)
- Consistent error handling with TRY/EXCEPT blocks
- Type-safe enums and constants
- Good use of Object Pascal idioms (list classes, destructors)

⚠️ Concerns

Minor Issues
- Global singleton (CoreObj) - acceptable for this app size but limits testability
- AI prompts hardcoded in methods - should be externalized (already noted in TODOs)
- ~10 TODOs scattered (minor features, not critical bugs)
- Version migration not implemented (stream versions exist but no upgrade logic)

Moderate Issues
- Some tight coupling (e.g., Question knows about Section, Lesson, and Core)
- Long methods in AI integration code (TLesson.PopulateSections ~400+ lines)
- Limited unit testing infrastructure

📊 Overall Assessment

Code Quality: 8/10 - Clean, maintainable Delphi code following good practices. Needs refactoring of AI integration methods and better separation of concerns.

Architecture: 8.5/10 - Solid hierarchical design appropriate for the problem domain. The data model is intuitive and well-structured. Minor coupling issues don't significantly impact maintainability.

Verdict: Production-ready for a prototype/MVP. The codebase is well-organized and maintainable. Main improvement areas: extract AI prompts to config, add unit tests, refactor long methods.

Can you say “early beta stage”?

* You cannot scroll up in the console to see previous text while Claude is working. This is (I guess) because of the progress animation.

* Claude is too verbose and asks for permission for every single damn character it writes. When asked “Do you want to proceed? 1. Yes / 2. Yes, allow reading from xyz folder during this session”, choose answer 2. This gives access to the whole folder (it won’t ask a similar question next time). But even so, it will still ask a lot of questions.

* Because of the above-mentioned issue, you cannot let Claude Code just “go”. You need to stare at the screen and choose answers (1 or 2) all the time.

* For complex tasks, Claude wants answers from me, but it does not show the question.

This (and other bugs) put the final nail in the coffin and my decision is final: Claude is still (early) beta.

Update: Digging through Claude’s settings, I confirmed that they provide a beta version as the default download:

Switch to Stable Channel
The stable channel may have an older version than what you're currently running (2.1.12).
How would you like to handle this?
  1. Allow possible downgrade to stable version
❯ 2. Stay on current version (2.1.12) until stable catches up

How good is Claude with Delphi?

Claude’s knowledge of Delphi is limited. Sometimes it doesn’t know basic stuff. I mean, really basic stuff, like the basic structure of a Delphi class.

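* Claude lacks a basic understanding of the Delphi language. Example (a constant declared above a normal field; without a VAR keyword or a new visibility section after the CONST line, Delphi parses FCurrFigure as another constant, so the class does not compile):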

TYPE
  TSessionNavigator = class(TObject)
  private
    CONST ClassSignature = 'TSessionNavigator';
    FCurrFigure : Integer;

* Also, it looks like Claude learned some bad habits from beginner Delphi programmers, so I had to introduce this rule:

Never use "if MyObject = NIL then EXIT" when MyObject should never be nil! Instead use an assertion or exception and crash the program.

* Another example of beginner code (two lines instead of one):

Result:= FALSE;
if NOT FProgress.Available then EXIT;

* The AI seems unsure of itself and leaves traces of what it changed all over the place. Example:

// Extracted from FormComm.NextItem (line 205)

* It does not use the proper components. Here is how dumb it can be:

Why did you use TEdit? Isn't there a dedicated search-box component in FMX?
❯ Regarding TSearchBox: While FMX does have a TSearchBox changing it would require more refactoring.
what needs refactored?
❯ Actually, not much at all! I overstated it. Switching to TSearchBox would be simple...

The first big test

Now that Claude had passed the first test, I asked it to implement all the ToDo’s it found in my code. Some are trivial, some are big. I created a backup so I could see all the changes it introduced.

It took over 12 minutes, 80% of the credits of the current session and 8% of the weekly quota.

The good:

- The AI took the liberty to add code for which there was no ToDo. For example, it found that I forgot to disable a button after the user clicked it. That’s nice.

- //ToDo 4: this needs to be placed in a thread because it locks the gui! – This was correctly implemented.

- In one place it moved the creation of a button from a child class into the parent class so the button could be available to other possible children. This is OK, though unnecessary because I don’t intend to create new children from that base class.

- It caught a bug where I had accidentally typed Opacity instead of LabelOpacity. Nice catch, though really minor.

- Some other minor fixes (one line of code or less).

The bad:

- In one place it replaced my Assert(Object<>nil) with “if object = nil then exit”. This is VERY bad, because that assertion was there precisely because I sometimes had a nil object where there shouldn’t have been one (usually I try to avoid passing nil objects around). This change was plain dangerous (interfering with the debugging/stability of the program).

- In one place it grossly misunderstood the architecture of the program and simply set a property to True. That was totally wrong. If I hadn’t caught that, it would have cost me 15 minutes of debugging.

- For some files the AI took the liberty to add code for which there was no ToDo. For example, it saved the position of a view port (TScrollBox) and restored it upon program restart. I have no interest in that. Plus, the code was bad (not compilable – WriteFloat() does not exist).

WriteFloat('frmScanEditor.ViewportX', ScrollBox.ViewportPosition.X);

The third test

Costs: This task cost 61% of my current session’s credits; it was done in about 25 minutes and I had to answer about 30 questions.

Productivity: 0% (at least it is not negative).

Since the previous (big) test failed, this is a small test, concentrated on a very specific task. I asked the AI to extract the business logic that I had crammed into a form (for quick testing) and move it to a separate unit. It only involved 2 files. First, I was welcomed by over 50 hints, warnings and errors. But then I realized that Claude forgot to add a unit to the uses clause. All errors were gone after fixing that.

Why 0% productivity: I think it took Claude too much time to move some lines of code from one file to the other. In total it moved 400 lines. BUT NOW, I have to check whether it did everything correctly.

This is a relatively small project (0.16 million lines) but with a complex architecture. I guess the other people who brag that Claude is magic for them have simplistic projects.

Update: Upon close inspection, I realized that Claude did not remove all the code from the form! So, I had to start a new task.

Update: I discovered unused code (created by the AI), so I had to start yet another task, which resulted in:

Removed unused methods:
- ✓ MoveToNextFigure - Declaration and implementation removed
- ✓ MoveToNextSection - Declaration and implementation removed
These methods were never called and added unnecessary complexity.

Update: The garbage never stops:

You're absolutely right - I implemented the Save/Load methods but never integrated them into the persistence flow. Let me fix this by integrating the session state persistence into TOscillator. First, let me read the TOscillator class to see where to add the integration...

The fourth test

At this point I have no doubt that Claude is not yet ready for code generation (though it works decently for bug finding and code review).

Since the previous test failed because of the code complexity, I asked Claude to create a brand new unit, totally independent of the existing code: a class that takes a screenshot. I expected a minimal amount of code in the form, with all the logic in the class. Right? What did Claude do? It mixed all the code with the GUI:

TScreenCaptureOverlay = class(TForm)
  private
    FScreenshot: FMX.Graphics.TBitmap;
    FSelectionRect: TRectF;
    FLastSelectionRect: TRectF;
    FStartPoint: TPointF;
    FMode: TScreenCaptureMode;
    FCapturedImages: TObjectList<FMX.Graphics.TBitmap>;
    FPaintBox: TPaintBox;
    FInstructionsLabel: TLabel;
    procedure CaptureScreen;
    procedure DrawOverlay(Sender: TObject; Canvas: TCanvas);
    procedure HandleKeyDown(Sender: TObject; var Key: Word; var KeyChar: Char; Shift: TShiftState);
    procedure HandleMouseDown(Sender: TObject; Button: TMouseButton; Shift: TShiftState; X, Y: Single);
    procedure HandleMouseMove(Sender: TObject; Shift: TShiftState; X, Y: Single);
    procedure HandleMouseUp(Sender: TObject; Button: TMouseButton; Shift: TShiftState; X, Y: Single);
    procedure CaptureSelectedArea;
    procedure UpdateInstructions;
    function IsCaptureKeyPressed(Key: Word; Shift: TShiftState): Boolean;
    procedure LoadLastSelection;
    procedure SaveLastSelection;
  public
    constructor Create(AOwner: TComponent); override;
    destructor Destroy; override;
    procedure StartCapture;
    function GetCapturedImages: TObjectList<FMX.Graphics.TBitmap>;
  end;

// Main interface class
TScreenCapture = class
  private
    FOverlay: TScreenCaptureOverlay;
    FOnComplete: TScreenCaptureCallback;
  public
    constructor Create;
    procedure Execute(OnComplete: TScreenCaptureCallback);
  end;

And before the code was functional I got:

You've hit your limit · resets 6pm
Opening your options…
What do you want to do?
1. Stop and wait for limit to reset
2. Upgrade your plan

More crappy code

Look at this crappy code:

procedure TMainForm.btnAddCategClick(Sender: TObject);
VAR
  Category: TCategory;
  ItemCateg: TItemCategory;
begin
  Category:= CoreObj.Categories.CreateNewCategory;
  ItemCateg:= TItemCategory.Create(TreeView, Category);  // Create new treeview item for this category

  // Show category settings wizzard
  if CoreObj.Sw_AutoShowCategSettingsFrm then
    if NOT ItemCateg.ShowWizzard then
      begin
        // User pressed Cancel - remove the category
        ItemCateg.Free;
        CoreObj.Categories.Remove(Category);
        Category.Free;
      end;
end;

Instead of:

procedure TMainForm.btnAddCategClick(Sender: TObject);
begin
  if CoreObj.Sw_AutoShowCategSettingsFrm then
    TFrmCategory.CreateNewCategory(TreeView);
end;

Update

So, I have been using Claude Code for 2 weeks now. I also upgraded to the 100 euro plan because the Pro plan is crap.

I gave Claude lots of tasks: sometimes it performs decently, other times it produces total crap. Here is an example: I asked it to convert a combo box into a list box. It totally overengineered the code (which in the end did not even compile).

Look at what it did:

Item.Data:= TValue.From<Integer>(i); // Store enum value

when it could have done something like this (better alternatives are also available):

Item.Tag:= i;

Because of the above crap code it had to write 50 lines of code instead of 5. When confronted:

You’re absolutely right! I was overengineering this. Let me search for the proper way to store custom data with FMX ListBox items.

Up to this point

In general, the AI only implemented the trivial ToDo’s, took too many liberties and broke code. Luckily, I reviewed every change. It worked on this for about 15 min, and I wasted some 15 minutes reviewing the changes, plus the cost of the tokens. I could have made the same progress in less than 15 minutes AND without paying for tokens.

Intelligence

From the “intelligence” point of view, Claude seems on par with Google’s Gemini, which I have used a lot lately (and with any other “big” LLM out there).

Laziness

Honestly, Claude seems lazy to me because it picked the easiest ToDo’s (11 of them). In total, it changed about 8 lines of code and added another 12 (and moved a button from the child class to the parent). Additionally, it introduced 2 massive errors. Also, it ignored all ToDo 5 tasks because they are, I quote: “Low priority UI enhancements”. WTF? So, they are not worthy of your time and you leave them to the human developer? Fuck you, Claude!

All the difficult tasks that would require a full day per task (for me) were avoided. And if the AI wasted so many credits and 15 minutes on 20 lines of code, I think it would require 24h to implement those tasks.

Laziness was typical of first-generation LLMs, but you can still see it sometimes today. I guess if I run the prompt again, it might implement all the ToDo’s; it just needs to be nudged a bit.

Boldness

On the other hand, Claude took too much liberty and changed the code in many places where there was no need/request for it. In other places it introduced bugs. Therefore, don’t you dare leave changes made by the AI(s) unsupervised. Use Beyond Compare to track all changes! 🙂

Size matters

So far, nobody else has reported the exact size of their project, but all tests on that forum seem to be on tiny projects.

In my case, I think Claude failed because I tried it on a small-to-medium size project. It might work for very small projects (under 50000-60000 lines).

Anything (at all) positive from all this thing?

It is much easier to work with Claude Code because you don’t have to copy/paste code into the chat box in the browser. I do see its benefits, and I think it will work nicely if asked to do some very specific tasks on small projects. Claude can also compile the project (see below). This is also useful.

But overall, test after test showed that Claude dramatically decreased my productivity. It works (sometimes) for small projects, but it chokes on big ones. In the end (as we already know), the programmer has to clean up its slop.

BUT I see its potential. At this stage I think it can be used for code review and bug hunting but not for hands-on programming – unless you are a beginner and the AI knows the language better than you.

And for people saying “You need to understand its limitations” they mean “it can’t do a lot for you” so I agree with them.

A word of warning

ALWAYS check every single line of code that Claude changed! After it makes changes, I use Total Commander (it only needs 3 keystrokes) to compare the current folder, which contains Claude’s changes, with a backup folder.
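If you don’t use Total Commander, the same backup-and-compare routine can be done from the WSL shell. A minimal sketch with hypothetical helper names, assuming GNU cp and diff; it only reports which files differ or exist on one side, so use a diff tool such as Beyond Compare for the line-level review:

```shell
# Hypothetical helpers: snapshot a project folder before a Claude session
# and list which files changed afterwards.
snapshot() {
  local src="$1" dest="$2"
  if [ -e "$dest" ]; then
    echo "backup $dest already exists; pick another name" >&2
    return 1
  fi
  cp -a "$src" "$dest"   # -a keeps timestamps and permissions
}

changes_since() {
  local backup="$1" current="$2"
  # -r: recurse into subfolders, -q: only report which files differ
  diff -rq "$backup" "$current"
}
```

Usage: run snapshot "/mnt/c/Projects/Envionera src" "/mnt/c/Projects/Envionera src.bak" before the session and changes_since with the backup and the project folder afterwards (the backup folder name is only an example).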

However, this method is not bulletproof, and it led to many wasted hours of debugging and more AI prompting (see the case above where the AI was supposed to move some code from a class to a new class but forgot to delete the code from the original class, so it kept updating duplicate code in two classes in parallel). Because it forgot to remove the code, the diff view of the original class showed no differences. If I had read both files line by line (15 minutes), I would have understood what the AI failed to do and why the diff failed. Now I know that diff is not infallible.
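A folder diff cannot show code that should have been deleted but wasn’t. One cheap extra check after a “move this code” task is to list the non-trivial lines that still appear in both the old and the new file. This is a heuristic sketch with a hypothetical helper name; the 20-character threshold is an arbitrary assumption meant to skip begin/end and other short lines:

```shell
# Hypothetical helper: print non-trivial lines that appear in BOTH files.
# After asking the AI to move code from one unit to another, a long list here
# suggests the code was copied but not removed from the original.
shared_lines() {
  local a="$1" b="$2"
  # Trim leading/trailing whitespace so re-indented code still matches.
  local norm='s/^[[:space:]]*//; s/[[:space:]]*$//'
  # Keep lines of 20+ characters (this also drops blank lines), then
  # sort -u both sides because comm needs sorted input; -12 = common lines only.
  comm -12 \
    <(sed "$norm" "$a" | awk 'length >= 20' | sort -u) \
    <(sed "$norm" "$b" | awk 'length >= 20' | sort -u)
}
```

Usage: shared_lines OldForm.pas NewLogic.pas (hypothetical unit names). An empty result does not prove the move was correct; it only rules out this particular failure.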

_______________________

Safety

ToDo: How safe is Claude Code? Can it delete my entire drive accidentally or only files in the allowed folders?

ToDo: Is Anthropic training the AI on my code? Confirm this. I heard it is enabled by default, so I need to disable it!
